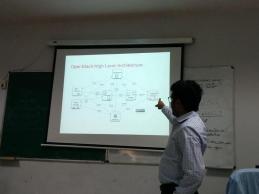

Glance’s role in OpenStack

Glance provides a service where users can upload and discover data assets that are meant to be used with other services, such as images for Nova and templates for Heat.

What are images?

A virtual machine image, abbreviated as ‘image’, is a single file that contains a virtual disk with a bootable operating system installed on it.

OUTLINE

End users would like to bring their own images into your cloud, but there are some complications associated with that.

COMPLICATIONS

- Some end users might not understand what the OpenStack image service is.

- People might not know what format their file is in. For example, someone might try to upload a JPEG, but a VM cannot be booted from a JPEG.

- Some end users know what the image service is but intentionally upload malicious images and share them with other users in the hope of planting backdoors.

- Some end users may upload a malicious image to try to attack the hypervisor itself, which is possible and unsavory.

- Some end users have really slow connections and large images.

- Images usually take some time to upload, and many slow, long-running uploads can tie up the image service.

- The image service is important to Nova, which uses it to boot VMs.

How to get information back to users?

The image status field is not very descriptive, and the uploaded data might not actually be a VM image. So the question is: do we really want to create an image record for something that is not actually an image?

One suggestion is to just go through the normal image create process: the image would be queued, and eventually the service would realize that it is not an image and kill it. But it would be frustrating for the user to wait that long only to find out that the upload was wrong.

There is a need for a mechanism that covers the following points:

- The uploaded data can be verified as a VM image.

- The image can be scanned for malware or exploits.

- It uses an interface that is common across OpenStack installations.

- Even with a common interface, it is still customizable, because different clouds use different technologies, different hypervisors, etc.

- Customizability also matters for exploits: when you learn about a new exploit, you can add a check for it right away, without waiting six months for the Liberty release cycle.

- It should provide useful feedback to the end user. The key thing is that some explanation should go with the image, i.e. a message saying that your image was killed, or what went wrong, if the upload fails.

- End users may want to download images to move them to another cloud for various reasons.

- A provider may want to pre-process an image before it is handed over to the end user.

- An end user may have a slow connection, which can slow down Glance connections.
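As a rough illustration of the first point, a provider-side check could look at the first bytes of the uploaded data before anything else happens. The sketch below is not how Glance does it: the function names are made up for this example, and it only knows three file signatures (QCOW, VMDK sparse extents and JPEG). Raw images have no signature at all, so a real check would need more than this.

```python
# Leading "magic" bytes of a few well-known file formats.
# Hypothetical helper table for this post, not part of Glance.
KNOWN_MAGIC = {
    b"QFI\xfb": "qcow2",      # QCOW family; a version field follows the magic
    b"KDMV": "vmdk",          # VMDK sparse extent header
    b"\xff\xd8\xff": "jpeg",  # JPEG start-of-image marker
}

# Formats from the table above that are disk images.
DISK_FORMATS = {"qcow2", "vmdk"}


def sniff_format(header: bytes) -> str:
    """Guess the file format from the first bytes of the upload."""
    for magic, name in KNOWN_MAGIC.items():
        if header.startswith(magic):
            return name
    return "unknown"


def looks_like_vm_image(header: bytes) -> bool:
    """True only if the header matches a known disk image signature."""
    return sniff_format(header) in DISK_FORMATS
```

With something like this in the workflow, the JPEG from the list of complications would be rejected after reading a few bytes instead of after a long upload.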

These are further long-running, asynchronous, image-related activities that Glance has to deal with, so this area also needs to be handled.

Role of Glance Tasks

- It provides a common API across OpenStack installations.

- The workflow of the actual operation is customizable per cloud provider.

- It doesn’t create an image until there is a high probability of success.

- It provides a way to deliver meaningful, helpful error messages. How helpful they are depends on how well the provider does things, but the point is that there is a way to do it.

- Expiration is built in: once a task object that you create reaches a final state, it gets an expires_at timestamp, which the end user cannot configure.

- It frees up the normal upload/download path for trusted users.
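To make the feedback point concrete, here is a small sketch of how a client might turn a task record into a message for the end user. It assumes the task arrives as a dict with `status`, `message` and `result` fields, and that the status is one of `pending`, `processing`, `success` or `failure`. `describe_task` and `is_final` are hypothetical helpers written for this post, not part of any client library.

```python
# Assumed final states of a task; anything else counts as "in progress".
FINAL_STATES = {"success", "failure"}


def is_final(task: dict) -> bool:
    """True once the task can no longer change state."""
    return task.get("status") in FINAL_STATES


def describe_task(task: dict) -> str:
    """Turn a task record into a one-line message for the end user."""
    status = task.get("status", "unknown")
    if status == "success":
        # Assumption: a finished import reports the new image as result.image_id.
        image_id = (task.get("result") or {}).get("image_id", "unknown")
        return f"Import succeeded, image id: {image_id}"
    if status == "failure":
        reason = task.get("message") or "no reason given"
        return f"Import failed: {reason}"
    return f"Import is still in progress (status: {status})"
```

The useful part is the failure branch: the user gets the reason from the task itself instead of an image that silently ends up killed.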

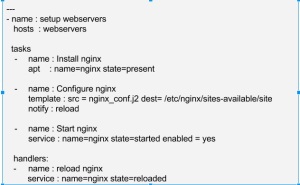

Creating the tasks using import method

To use the Tasks API, we can use cURL, a software package that consists of a command-line tool and a library for transferring data using URL syntax. cURL supports various protocols, including DICT, FILE, FTP, FTPS, Gopher, HTTP, HTTPS, IMAP, IMAPS, LDAP, LDAPS, POP3, POP3S, RTMP, RTSP, SCP, SFTP, SMTP, SMTPS, Telnet and TFTP.

First of all, we need to source the openrc file. To do that, run `source openrc admin admin`.

The fastest way to get a curl command is to run `glance --debug image-list`. This prints the list of images along with the curl command that was used, something like this:

    curl -g -i -X GET -H 'Accept-Encoding: gzip, deflate' -H 'Accept: */*' -H 'User-Agent: python-glanceclient' -H 'Connection: keep-alive' -H 'X-Auth-Token: {SHA1}74fe76ffe176eb077ef4975a2df56c6af0fa5f28' -H 'Content-Type: application/octet-stream' http://10.0.2.15:9292/v1/images/detail?sort_key=name&sort_dir=asc&limit=20

Following the same pattern, a request that creates an import task can be written as:

    curl -g -i \
      -H 'Accept-Encoding: gzip, deflate' \
      -H 'Accept: */*' \
      -H 'User-Agent: python-glanceclient' \
      -H 'Connection: keep-alive' \
      -H 'X-Auth-Token: 709d7c24021d4366b8c3df04ce44e3e6' \
      -H 'Content-Type: application/json' \
      -d '{"type": "import", "input": {"import_from": "https://launchpad.net/cirros/trunk/0.3.0/+download/cirros-0.3.0-x86_64-disk.img", "import_from_format": "raw", "image_properties": {"name": "test-conversion-1", "container_format": "bare"}}}' \
      -X POST http://10.0.2.15:9292/v2/tasks

- `-H` adds a request header; the headers are necessary for the request to be processed properly.

- `-X` sets the HTTP method, which can be GET, POST, DELETE or PUT.

- `-d` supplies the request data, which can be JSON, XML, etc.; the data should be wrapped in single quotes.

The curl command we got from `glance --debug image-list` has the `-X` option at the beginning rather than at the end, but that doesn’t matter; the cURL command above is simply organized more readably.
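For readers who would rather script this than paste curl commands, the same request can be sketched with Python's standard library. This only builds the request object; the endpoint and token passed in are placeholders, and `build_import_request` is a name invented for this post. The comments map each argument back to the curl options described above.

```python
import json
import urllib.request


def build_import_request(endpoint, token, import_from, import_from_format,
                         image_properties):
    """Build (but do not send) a POST to the tasks endpoint."""
    body = {
        "type": "import",
        "input": {
            "import_from": import_from,
            "import_from_format": import_from_format,
            "image_properties": image_properties,
        },
    }
    return urllib.request.Request(
        url=endpoint.rstrip("/") + "/v2/tasks",
        data=json.dumps(body).encode("utf-8"),      # curl -d '...'
        headers={
            "X-Auth-Token": token,                  # curl -H 'X-Auth-Token: ...'
            "Content-Type": "application/json",     # curl -H 'Content-Type: ...'
        },
        method="POST",                              # curl -X POST
    )


if __name__ == "__main__":
    req = build_import_request(
        "http://10.0.2.15:9292",
        "709d7c24021d4366b8c3df04ce44e3e6",
        "https://launchpad.net/cirros/trunk/0.3.0/+download/cirros-0.3.0-x86_64-disk.img",
        "raw",
        {"name": "test-conversion-1", "container_format": "bare"},
    )
    print(req.get_method(), req.full_url)
    # Sending it needs a reachable Glance endpoint and a valid token:
    # with urllib.request.urlopen(req) as resp:
    #     print(resp.status, json.load(resp))
```

Building the body from a dict and letting `json.dumps` serialize it also avoids the quoting mistakes that are easy to make inside a single-quoted `-d` string.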

The auth token above is different for each user and is valid only for a specific amount of time, so we need a currently valid token whenever we use any OpenStack service. To get one, run `keystone token-get` and copy the token id into the X-Auth-Token header.

In the Content-Type header, replace “application/octet-stream” with “application/json”, because the tasks API takes a JSON body. The body looks something like this:
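`keystone token-get` does the exchange with the Identity service for you. If you want to script it, the sketch below shows the two ends of that exchange under the assumption that your cloud still offers the Identity v2.0 API, where the credentials are POSTed as JSON to the `/v2.0/tokens` path of the Identity endpoint. Both function names are invented for this post, and which API version your deployment exposes is something to check first.

```python
import json


def build_token_request_body(username, password, tenant_name):
    """JSON body for a password-based token request (assumed Identity v2.0)."""
    return {
        "auth": {
            "tenantName": tenant_name,
            "passwordCredentials": {
                "username": username,
                "password": password,
            },
        }
    }


def extract_token(response_body):
    """Pull the token id and its expiry time out of the JSON response text."""
    token = json.loads(response_body)["access"]["token"]
    return token["id"], token["expires"]
```

The expiry time returned next to the id is the reason a token copied into a curl command stops working after a while.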

    {
      "type": "import",
      "input": {
        "import_from": "swift://cloud.foo/myaccount/mycontainer/path",
        "import_from_format": "qcow2",
        "image_properties": {
          "name": "GreatStack 1.22",
          "tags": ["lamp", "custom"]
        }
      }
    }

The data is wrapped in single quotes and is a JSON document. Then comes `-X`, which sets the HTTP method, in this case POST.
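Since a typo in the JSON only shows up after the request has been sent, it can help to check the body locally first. The sketch below is one way to do that. Which keys count as required is an assumption taken from the two examples in this post, not from the API definition, and `check_import_task` is a hypothetical helper.

```python
# Keys that both examples in this post put under "input" (an assumption,
# not the official schema).
REQUIRED_INPUT_KEYS = ("import_from", "import_from_format", "image_properties")


def check_import_task(body: dict) -> list:
    """Return a list of problems found in an import task body (empty if none)."""
    problems = []
    if body.get("type") != "import":
        problems.append('"type" must be "import"')
    task_input = body.get("input")
    if not isinstance(task_input, dict):
        problems.append('"input" must be an object')
        return problems
    for key in REQUIRED_INPUT_KEYS:
        if key not in task_input:
            problems.append(f'"input.{key}" is missing')
    props = task_input.get("image_properties")
    if props is not None and not isinstance(props, dict):
        problems.append('"input.image_properties" must be an object')
    return problems
```

An empty list means the body has the same shape as the examples above; it says nothing about whether the service will accept the values.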

Cheers!!